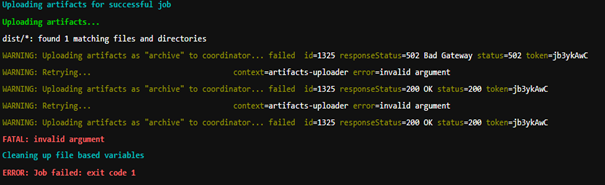

While running a build job in GitLab CI/CD, the artifact upload failed.

Expected behaviour: artifacts are uploaded successfully.

Current behaviour: artifact uploads fail most of the time, although they occasionally succeed.

Logs:

```
[root@srvxdocker01 gitlab-workhorse]# tail -f current | grep "error"
{"correlation_id":"01EZSFN4ZWRMDMMPVZ9BTYVY55","error":"handleFileUploads: extract files from multipart: persisting multipart file: unexpected EOF","level":"error","method":"POST","msg":"error","time":"2021-03-02T12:47:24Z","uri":"/api/v4/jobs/1337/artifacts?artifact_format=zip\u0026artifact_type=archive"}
{"correlation_id":"01EZSFN68Y4F3JA1WR62WQ14TZ","error":"handleFileUploads: extract files from multipart: persisting multipart file: unexpected EOF","level":"error","method":"POST","msg":"error","time":"2021-03-02T12:47:25Z","uri":"/api/v4/jobs/1337/artifacts?artifact_format=zip\u0026artifact_type=archive"}
{"correlation_id":"01EZSFN87NGZCF4GDYKQMV2VVR","error":"handleFileUploads: extract files from multipart: persisting multipart file: unexpected EOF","level":"error","method":"POST","msg":"error","time":"2021-03-02T12:47:27Z","uri":"/api/v4/jobs/1337/artifacts?artifact_format=zip\u0026artifact_type=archive"}

[root@srvxdocker01 gitlab-workhorse]# cat current | grep 01EZSFN4ZWRMDMMPVZ9BTYVY55
{"correlation_id":"01EZSFN4ZWRMDMMPVZ9BTYVY55","error":"handleFileUploads: extract files from multipart: persisting multipart file: unexpected EOF","level":"error","method":"POST","msg":"error","time":"2021-03-02T12:47:24Z","uri":"/api/v4/jobs/1337/artifacts?artifact_format=zip\u0026artifact_type=archive"}
{"content_type":"text/plain; charset=utf-8","correlation_id":"01EZSFN4ZWRMDMMPVZ9BTYVY55","duration_ms":206,"host":"my_host","level":"info","method":"POST","msg":"access","proto":"HTTP/1.1","referrer":"","remote_addr":"ip","remote_ip":"ip","route":"^/api/v4/jobs/[0-9]+/artifacts\z","status":500,"system":"http","time":"2021-03-02T12:47:24Z","ttfb_ms":205,"uri":"/api/v4/jobs/1337/artifacts?artifact_format=zip\u0026artifact_type=archive","user_agent":"gitlab-runner 13.7.0 (13-7-stable; go1.13.8; linux/amd64)","written_bytes":22}
```
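To line up every Workhorse error with its matching access-log entry at once, instead of grepping one correlation ID at a time as above, a short Python sketch like the following may help. It assumes the Workhorse `current` file holds one JSON object per line, as in the excerpt; `summarize`, `main` and the script name are hypothetical.

```python
import json
import sys
from collections import defaultdict


def summarize(lines):
    """Group GitLab Workhorse JSON log entries by correlation_id.

    Returns a dict mapping each correlation_id to
    {"errors": [error messages], "status": HTTP status from the access entry or None}.
    Blank lines and lines that are not valid JSON objects are skipped.
    """
    summary = defaultdict(lambda: {"errors": [], "status": None})
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        try:
            entry = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not a JSON log line
        if not isinstance(entry, dict):
            continue
        cid = entry.get("correlation_id")
        if cid is None:
            continue
        record = summary[cid]
        if entry.get("level") == "error":
            # Prefer the "error" field; fall back to "msg" if it is absent.
            record["errors"].append(str(entry.get("error", entry.get("msg", ""))))
        if entry.get("msg") == "access":
            record["status"] = entry.get("status")
    return dict(summary)


def main(path):
    # Print one line per request that logged an error: id, status, messages.
    with open(path, encoding="utf-8") as f:
        for cid, record in summarize(f).items():
            if record["errors"]:
                print(cid, record["status"], "; ".join(record["errors"]))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 summarize_workhorse.py current
    main(sys.argv[1])
```

Run over the whole `current` file, this would show whether every failed request ended with the same `unexpected EOF` error and an HTTP 500, or whether other failure modes are mixed in.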

Versions:

- GitLab: 13.7.5
- GitLab Runner: 13.7.0

Snippet of `.gitlab-ci.yml`:

```yaml
build_job:
  stage: build
  script:
    - echo "Building python library & wheel"
    - echo "Test for Build again-29"
    - python3 setup.py bdist_wheel
  artifacts:
    paths:
      - dist/*whl
```
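To confirm exactly which files the `artifacts:paths` entry picks up, and how large the wheel is before the runner uploads it, the matches can be listed with a small Python sketch. `list_artifacts` is a hypothetical helper, and Python's `glob` is only an approximation of the path matching GitLab Runner performs itself; for a simple pattern such as `dist/*whl` the two are assumed to agree, but that is not guaranteed (for example, Python's `glob` skips file names that start with a dot).

```python
import glob
import os


def list_artifacts(pattern="dist/*whl"):
    """Return (path, size_in_bytes) for every regular file matching pattern.

    Uses Python's glob as an approximation of GitLab Runner's own
    artifact path matching.
    """
    return [
        (path, os.path.getsize(path))
        for path in sorted(glob.glob(pattern))
        if os.path.isfile(path)
    ]


if __name__ == "__main__":
    matches = list_artifacts()
    if not matches:
        print("No files match dist/*whl")
    for path, size in matches:
        print(f"{path}: {size} bytes")
```

It could, for instance, be run as an extra `script` step after `python3 setup.py bdist_wheel` so that the wheel size is recorded in the job log next to the failed upload.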

Output of `cat /etc/gitlab-runner/config.toml`:

```toml
[[runners]]
  name = "runner01"
  url = "my_host"
  token = "my_token"
  executor = "docker"
  [runners.custom_build_dir]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    tls_verify = false
    image = "docker:latest"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/cache"]
    shm_size = 0
```

I have already tried the following:

- Upgraded GitLab from 12.7.0 to 13.7.5 and GitLab Runner to 13.7.
- Tested with other GitLab Runner versions (12.9 and 13.8).

Thanks in advance.