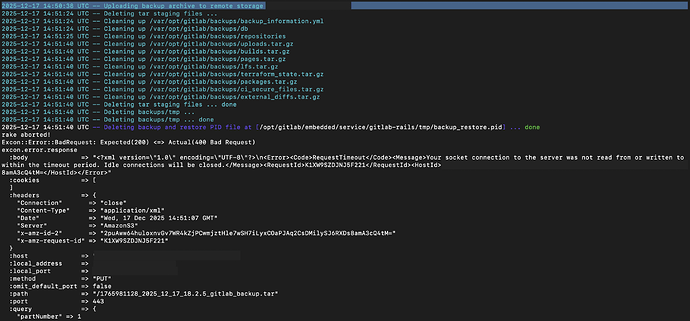

While reviewing the backup settings of my GitLab Community Edition 18.2.x (Omnibus) instance, which runs on an AWS EC2 instance, I found that backup files are accumulating uncontrollably and filling up the server's storage. When I ran a manual backup, the standard output showed a problem that appears to be related to performance rather than to configuration. The backup files have grown to about 9 GB, and GitLab's native uploader (Fog) may be timing out when it tries to send them to the S3 bucket. When the upload fails, the process aborts before the step that prunes old backup archives (the tar staging files are still cleaned up, as the log shows), which is why the archives keep accumulating:

```text
2025-12-17 14:50:38 UTC -- Uploading backup archive to remote storage s3-backup ...
2025-12-17 14:51:24 UTC -- Deleting tar staging files ...
2025-12-17 14:51:24 UTC -- Cleaning up /var/opt/gitlab/backups/backup_information.yml
2025-12-17 14:51:24 UTC -- Cleaning up /var/opt/gitlab/backups/db
2025-12-17 14:51:25 UTC -- Cleaning up /var/opt/gitlab/backups/repositories
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/uploads.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/builds.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/pages.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/lfs.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/terraform_state.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/packages.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/ci_secure_files.tar.gz
2025-12-17 14:51:40 UTC -- Cleaning up /var/opt/gitlab/backups/external_diffs.tar.gz
2025-12-17 14:51:40 UTC -- Deleting tar staging files ... done
2025-12-17 14:51:40 UTC -- Deleting backups/tmp ...
2025-12-17 14:51:40 UTC -- Deleting backups/tmp ... done
2025-12-17 14:51:40 UTC -- Deleting backup and restore PID file at [/opt/gitlab/embedded/service/gitlab-rails/tmp/backup_restore.pid] ... done
rake aborted!
Excon::Error::BadRequest: Expected(200) <=> Actual(400 Bad Request)
excon.error.response
```
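The log is consistent with a run in which the tar staging files are always cleaned up, while pruning of old archives is only reached after a successful upload. Below is a hypothetical Ruby model of that control flow, not GitLab's actual code: `run_backup`, `UploadError`, and the step symbols are illustrative names, and each archive is represented only by its creation time in seconds.

```ruby
# Hypothetical model of the failure mode suggested by the log above.
# NOT GitLab's implementation: class, method, and step names are illustrative.

DAY = 24 * 60 * 60

class UploadError < StandardError; end

# archives: creation times (seconds) of the archives currently on disk.
# log: collects the steps that actually ran, in order.
def run_backup(archives, upload_ok:, keep_time:, now:, log:)
  archives << now # the new .tar archive is written locally before uploading
  begin
    log << :pack
    # Assumption: a failed upload raises, like the Excon 400 error in the log.
    raise UploadError, "Expected(200) <=> Actual(400 Bad Request)" unless upload_ok
    log << :upload
    # Pruning is only reached after a successful upload.
    archives.reject! { |created_at| now - created_at > keep_time }
    log << :prune
  ensure
    # Runs even when the upload raised ("Deleting tar staging files ... done").
    log << :cleanup_staging
  end
end

# Ten daily runs with a failing upload: nothing is ever pruned.
archives = []
log = []
10.times do |day|
  begin
    run_backup(archives, upload_ok: false, keep_time: 7 * DAY, now: day * DAY, log: log)
  rescue UploadError
    # "rake aborted!": the next scheduled run starts from the same state
  end
end
puts archives.size # prints 10
```

With `upload_ok: true`, the same loop keeps only the archives younger than `keep_time`, which points at the upload failure rather than at `backup_keep_time` as the cause of the accumulation.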

Is there a way to configure GitLab to avoid this behavior? I would like to keep 7 days of backups locally (on the server) and 30 days in the S3 bucket.
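One possible split, sketched under assumptions: according to GitLab's backup documentation, `backup_keep_time` only manages local files, and retention in object storage is meant to be handled by the storage itself (for example an S3 lifecycle expiration rule on the bucket), which would cover the 30-day requirement. For the 7-day local requirement, a pruner that runs on its own schedule (for example from cron) does not depend on the upload succeeding. The script below is a hypothetical sketch, not a GitLab tool; it assumes archives live in `/var/opt/gitlab/backups` (the directory from the log) and match the `*_gitlab_backup.tar` naming, and `prune_local_backups` is an illustrative name.

```ruby
# Hypothetical standalone pruner for local GitLab backup archives.
# Not a GitLab tool: meant to be scheduled (e.g. via cron) so it runs
# even when the S3 upload fails.

BACKUP_DIR = "/var/opt/gitlab/backups"  # directory seen in the log
LOCAL_KEEP_SECONDS = 7 * 24 * 60 * 60   # 7 days

# Deletes *_gitlab_backup.tar files whose mtime is older than keep_seconds
# and returns their paths. With dry_run: true nothing is deleted.
def prune_local_backups(dir, keep_seconds, now: Time.now, dry_run: false)
  cutoff = now - keep_seconds
  expired = Dir.glob(File.join(dir, "*_gitlab_backup.tar")).select do |path|
    File.mtime(path) < cutoff
  end
  expired.each { |path| File.delete(path) } unless dry_run
  expired
end

if $PROGRAM_NAME == __FILE__
  # Dry run first: only list what would be removed.
  prune_local_backups(BACKUP_DIR, LOCAL_KEEP_SECONDS, dry_run: true).each do |path|
    puts "would delete #{path}"
  end
end
```

Keying on file modification time rather than parsing the timestamp in the filename keeps the sketch independent of the exact backup ID format.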

Steps to reproduce

```shell
/opt/gitlab/bin/gitlab-backup create SKIP=artifacts
```

Configuration

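```ruby
# Keep backups for 30 days (2592000 s = 30 * 24 * 60 * 60)
gitlab_rails['backup_keep_time'] = 2592000

# Upload archives to S3, authenticating with the EC2 instance's IAM role
gitlab_rails['backup_upload_connection'] = {
  'provider' => 'AWS',
  'region' => 'us-east-1',
  'use_iam_profile' => true
}
# Target S3 bucket
gitlab_rails['backup_upload_remote_directory'] = 's3-backup'

# Currently disabled:
# gitlab_rails['backup_multipart_chunk_size'] = 104857600  # 100 MiB
# gitlab_rails['backup_storage_class'] = 'STANDARD'
```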
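Because `backup_keep_time` is expressed in seconds, the 7-day local goal would correspond to the value below. This is a config sketch only: `gitlab.rb` is evaluated as Ruby, so the arithmetic can be written out, and the change is applied with `gitlab-ctl reconfigure`. Judging by the log, pruning appears to run only after a successful upload, so this value would only take effect on runs whose upload completes.

```ruby
# Sketch: keep local archives for 7 days instead of 30.
# 7 * 24 * 60 * 60 = 604800 seconds
gitlab_rails['backup_keep_time'] = 7 * 24 * 60 * 60
```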

Versions

Please add an x where the options apply, and add the version information.

- [x] Self-managed
- [ ] GitLab.com SaaS
- [ ] Dedicated

Version

- GitLab 18.2.5 Community Edition