Hello,

So I’ve recently created a little test environment, just to mess around with creating a pipeline.

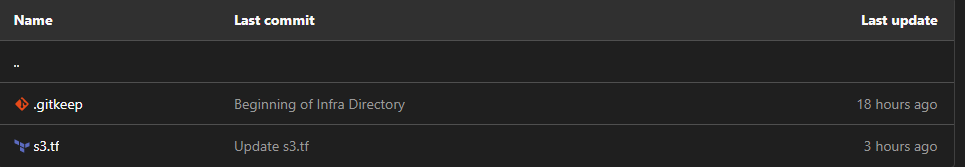

I have my .gitlab-ci.yml file in the root of my repository. If I add a new file there, s3.tf for example, with the necessary code to create an S3 bucket in AWS, I can run my pipeline and it deploys the infrastructure with no problem at all. However, I’d like to expand this project at some point, so I would prefer to put all of my ‘infrastructure’ files into a directory called ‘infra’.

Therefore, I created an ‘infra’ directory within the project and added a new Terraform file to it that deploys different AWS infrastructure, but the pipeline doesn’t seem to detect this directory or any of the files in it. I’m probably missing something really stupid, but any help would be great! Thanks.

Contents of .gitlab-ci.yml:

    image: registry.gitlab.com/gitlab-org/terraform-images/stable:latest

    variables:
      TF_ROOT: ${CI_PROJECT_DIR}/
      TF_ADDRESS: ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/terraform/state/tf-state

    cache:
      key: example-production
      paths:
        - ${TF_ROOT}/.terraform

    before_script:
      - cd ${TF_ROOT}

    stages:
      - prepare
      - validate
      - build
      - deploy

    init:
      stage: prepare
      script:
        - gitlab-terraform init

    validate:
      stage: validate
      variables:
        TF_VAR_AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID}
        TF_VAR_AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY}
        TF_VAR_AWS_DEFAULT_REGION: ${AWS_DEFAULT_REGION}
      script:
        - gitlab-terraform validate

    plan:
      stage: build
      variables:
        TF_VAR_AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID}
        TF_VAR_AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY}
        TF_VAR_AWS_DEFAULT_REGION: ${AWS_DEFAULT_REGION}
      script:
        - gitlab-terraform plan
        - gitlab-terraform plan-json
      artifacts:
        name: plan
        paths:
          - ${TF_ROOT}/plan.cache
        reports:
          terraform: ${TF_ROOT}/plan.json

    apply:
      stage: deploy
      variables:
        TF_VAR_AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID}
        TF_VAR_AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY}
        TF_VAR_AWS_DEFAULT_REGION: ${AWS_DEFAULT_REGION}
      environment:
        name: production
      script:
        - gitlab-terraform apply
      dependencies:
        - plan
      when: manual
      only:
        - main
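
For reference, Terraform only loads the .tf files in the directory it is run from and does not descend into subdirectories unless they are referenced as modules. In the file above, every job does `cd ${TF_ROOT}`, and `TF_ROOT` is the project root, so files under ‘infra’ would never be read. A minimal sketch of one possible change, assuming all Terraform files (including any that currently sit in the root) are moved into ‘infra’ and nothing else in the pipeline is altered:

```yaml
variables:
  # Assumption: every .tf file now lives in <project root>/infra
  TF_ROOT: ${CI_PROJECT_DIR}/infra
  TF_ADDRESS: ${CI_API_V4_URL}/projects/${CI_PROJECT_ID}/terraform/state/tf-state

cache:
  key: example-production
  paths:
    # Follows TF_ROOT, so the provider cache is now infra/.terraform
    - ${TF_ROOT}/.terraform

before_script:
  # Unchanged: each job still switches into TF_ROOT before running gitlab-terraform
  - cd ${TF_ROOT}
```

The artifact paths in the plan job already use `${TF_ROOT}`, so under this assumption they would resolve to infra/plan.cache and infra/plan.json without further edits.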