hendry

October 6, 2022, 8:12am


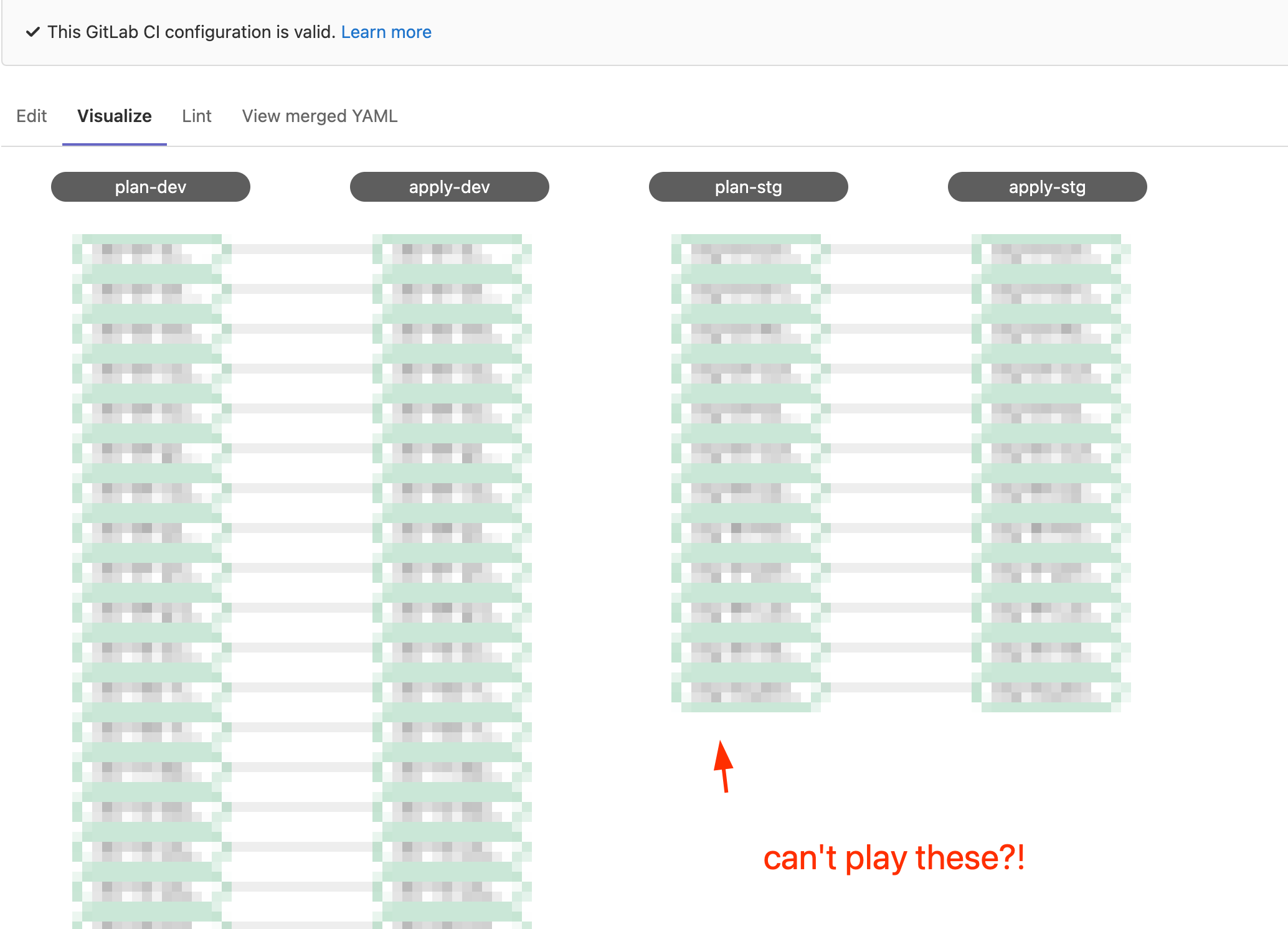

I have a downstream pipeline on self-hosted GitLab 14.0.12-ee that generates something like:

```yaml
stages:
  - plan-dev
  - apply-dev
  - plan-stg
  - apply-stg
  - plan-prd
  - apply-prd
```

This is across a bunch of stacks. Oddly, I can run plan-dev and apply-dev on any stack, but the next stage, plan-stg, has a job that looks like this:

```yaml
stg-16-foobar:plan:
  stage: plan-stg
  script:
    - cd stacks/backend/16-foobar
    - make -f $CI_PROJECT_DIR/Makefile init env=stg account=backend
    - make -f $CI_PROJECT_DIR/Makefile plan
  rules:
    - when: manual
      allow_failure: true
```

It is created, but I don't see a Play button!? It just says "This job has not been triggered yet". What am I missing, please?

@hendry, is this also what appears when you view the pipeline from the pipeline detail page?

Based on my test on gitlab.com, I don't believe the play button appears in the Visualize rendering of the pipeline editor.

-James H, GitLab Product Manager, Verify:Pipeline Execution

hendry

October 14, 2022, 2:11am


Sorry, I may have used the Visualize view to demonstrate the issue. Here is something more concrete from my dynamic pipeline:

When I hover over the stg-01-acm … job that I want to run, it just says "Created". I can't trigger it and I don't know why!

Do those downstream jobs perhaps have a `needs:` relationship with the upstream jobs?

This is a really simple example, but it shows play buttons for all jobs in all stages with a `.gitlab-ci.yml` that's roughly like yours.

If you can share more of your `.gitlab-ci.yml`, it may help with troubleshooting.

-James H, GitLab Product Manager, Verify:Pipeline Execution

hendry

October 14, 2022, 2:46am


There are no `needs`. Does a job require `needs:` just to be run manually?

```yaml
stg-01-acm-internal:plan:
  stage: plan-stg
  variables:
    TF_PROJECT_DIR: stacks/infra/01-acm-internal
    PLAN_JSON: $CI_PROJECT_DIR/stacks/infra/01-acm-internal/plan.json
  script:
    - cd stacks/infra/01-acm-internal
    - rm .terraform/terraform.tfstate || true
    - CREDS=$(aws sts assume-role --role-arn arn:aws:iam::XXXXXXXXX:role/InfraGitlabRunner --role-session-name Gitlab)
    - export AWS_ACCESS_KEY_ID=$(echo $CREDS | jq -r '.Credentials.AccessKeyId')
    - export AWS_SECRET_ACCESS_KEY=$(echo $CREDS | jq -r '.Credentials.SecretAccessKey')
    - export AWS_SESSION_TOKEN=$(echo $CREDS | jq -r '.Credentials.SessionToken')
    - aws sts get-caller-identity
    - make -f $CI_PROJECT_DIR/Makefile init env=stg account=infra
    - make -f $CI_PROJECT_DIR/Makefile plan
    - terraform show --json tfplan | convert_report > $PLAN_JSON
  artifacts:
    paths:
      - $TF_PROJECT_DIR/tfplan
      - $TF_PROJECT_DIR/.terraform
      - $TF_PROJECT_DIR/.terraform.lock.hcl
    reports:
      terraform: $PLAN_JSON
  rules:
    - when: manual
      allow_failure: true
```

You should not have to add `needs`, but if you do, be aware of how it may affect the pipeline.

My guess is that this is caused by a bug that was fixed sometime between the version you are running (14.0.12) and what is on gitlab.com (15.4), though I couldn't find a matching issue in a quick search.

If you can replicate this on gitlab.com, could you add me to the project so I can take a look? Thanks!

-James H, GitLab Product Manager, Verify:Pipeline Execution

hendry

October 14, 2022, 11:45am


Adding `needs` did the trick, btw! Thank you, and have a great weekend.
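For anyone hitting the same "Created" state: the thread doesn't show the exact `needs` that fixed it, so here is a minimal sketch under stated assumptions. The upstream job name `dev-01-acm-internal:apply` is hypothetical (the apply-dev jobs aren't shown above); point it at whichever job the staging plan should actually follow. A job with `needs:` is released once the jobs it lists have finished, rather than waiting on every job in all earlier stages across all stacks. `needs: []` would instead let the job start without waiting for any earlier job.

```yaml
stg-01-acm-internal:plan:
  stage: plan-stg
  # Hypothetical upstream job name, for illustration only.
  # With needs:, this job waits only for the listed job(s),
  # not for every job in the earlier stages.
  # Use `needs: []` to depend on no earlier job at all.
  needs:
    - dev-01-acm-internal:apply
  script:
    - cd stacks/infra/01-acm-internal
    - make -f $CI_PROJECT_DIR/Makefile init env=stg account=infra
    - make -f $CI_PROJECT_DIR/Makefile plan
  rules:
    - when: manual
      allow_failure: true
```

This is trimmed to the keys relevant to the question. Note that `needs:` also downloads artifacts from the listed jobs by default, which may or may not be what a Terraform plan job wants.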
